Whether it’s done consciously or subconsciously, racial discrimination continues to have a serious, measurable impact on the choices our society makes about criminal justice, law enforcement, hiring and financial lending. It might be tempting, then, to feel encouraged as more and more companies and government agencies turn to seemingly dispassionate technologies for help with some of these complicated decisions, which are often influenced by bias. Rather than relying on human judgment alone, organizations are increasingly asking algorithms to weigh in on questions that have profound social ramifications, like whether to recruit someone for a job, give them a loan, identify them as a suspect in a crime, send them to prison or grant them parole.

But an increasing body of research and criticism suggests that algorithms and artificial intelligence aren’t necessarily a panacea for ending prejudice, and they can have disproportionate impacts on groups that are already socially disadvantaged, particularly people of color. Instead of offering a workaround for human biases, the tools we designed to help us predict the future may be dooming us to repeat the past by replicating and even amplifying societal inequalities that already exist.

These data-fueled predictive technologies aren’t going away anytime soon. So how can we address the potential for discrimination in incredibly complex tools that have already quietly embedded themselves in our lives and in some of the most powerful institutions in the country?

In 2014, a report from the Obama White House warned that automated decision-making “raises difficult questions about how to ensure that discriminatory effects resulting from automated decision processes, whether intended or not, can be detected, measured, and redressed.”

Over the last several years, a growing number of experts have been trying to answer those questions by starting conversations, developing best practices and principles of accountability, and exploring solutions for the complex and insidious problem of algorithmic bias.

Thinking critically about the data matters

Although AI decision-making is often regarded as inherently objective, the data and processes that inform it can invisibly bake inequality into systems that are intended to be equitable. Avoiding that bias requires an understanding of both very complex technology and very complex social issues.

Consider COMPAS, a widely used algorithm that assesses whether defendants and convicts are likely to commit crimes in the future. The risk scores it generates are used throughout the criminal justice system to help make sentencing, bail and parole decisions.

At first glance, COMPAS appears fair: White and black defendants given higher risk scores tended to reoffend at roughly the same rate. But an analysis by ProPublica found that, when you examine the types of mistakes the system made, black defendants who did not go on to reoffend were almost twice as likely as white defendants to be mislabeled as likely to reoffend, and potentially treated more harshly by the criminal justice system as a result. Conversely, white defendants who committed a new crime in the two years after their COMPAS assessment were twice as likely as black defendants to have been mislabeled as low-risk. (COMPAS developer Northpointe, which recently rebranded as Equivant, issued a rebuttal in response to the ProPublica analysis; ProPublica, in turn, issued a counter-rebuttal.)

“Northpointe answers the question of how accurate it is for white people and black people,” said Cathy O’Neil, a data scientist who wrote the National Book Award-nominated “Weapons of Math Destruction,” “but it does not ask or care about the question of how inaccurate it is for white people and black people: How many times are you mislabeling somebody as high-risk?”
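The disagreement between ProPublica and Northpointe comes down to which error rate you look at, and a toy calculation shows how both sides can point to real numbers at once. The sketch below uses made-up counts for two hypothetical groups (not COMPAS data) with different underlying reoffense rates. Among people labeled high-risk, 60 percent reoffend in both groups, so the score looks equally accurate; yet one group has more than double the share of non-reoffenders wrongly labeled high-risk, while the other has double the share of reoffenders wrongly labeled low-risk.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Confusion-matrix counts for one group (hypothetical numbers, not COMPAS data)."""
    tp: int  # labeled high-risk, did reoffend
    fp: int  # labeled high-risk, did not reoffend
    fn: int  # labeled low-risk, did reoffend
    tn: int  # labeled low-risk, did not reoffend


def rates(c: Counts) -> dict:
    return {
        # Share of high-risk labels that turned out right (the "accuracy" Northpointe stressed)
        "ppv": c.tp / (c.tp + c.fp),
        # Share of non-reoffenders wrongly labeled high-risk
        "fpr": c.fp / (c.fp + c.tn),
        # Share of reoffenders wrongly labeled low-risk
        "fnr": c.fn / (c.fn + c.tp),
    }


groups = {
    "group_a": Counts(tp=60, fp=40, fn=20, tn=80),   # 40% base rate
    "group_b": Counts(tp=30, fp=20, fn=30, tn=120),  # 30% base rate
}

results = {name: rates(c) for name, c in groups.items()}
for name, r in results.items():
    print(name, {k: round(v, 3) for k, v in r.items()})
# group_a {'ppv': 0.6, 'fpr': 0.333, 'fnr': 0.25}
# group_b {'ppv': 0.6, 'fpr': 0.143, 'fnr': 0.5}
```

When base rates differ between groups and predictions are imperfect, a score generally cannot equalize this kind of calibration and both error rates at the same time, which is why choosing the metric is itself a value judgment.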

An even stickier question is whether the data being fed into these systems might reflect and reinforce societal inequality. For example, critics suggest that at least some of the data used by systems like COMPAS is fundamentally tainted by racial inequalities in the criminal justice system.

“If you’re looking at how many convictions a person has and taking that as a neutral variable — well, that’s not a neutral variable,” said Ifeoma Ajunwa, a law professor who has testified before the Equal Employment Opportunity Commission on the implications of big data. “The criminal justice system has been shown to have systematic racial biases.”

Black people are arrested more often than whites, even when they commit crimes at the same rates. Black people are also sentenced more harshly and are more likely to be searched or arrested during a traffic stop. That’s context that could be lost on an algorithm (or an engineer) taking those numbers at face value.

“The focus on accuracy implies that the algorithm is searching for a true pattern, but we don’t really know if the algorithm is in fact finding a pattern that’s true of the population at large or just something it sees in its data,” said Suresh Venkatasubramanian, a computing professor at the University of Utah who studies algorithmic fairness.

Biased data can create feedback loops that function like a sort of algorithmic confirmation bias, where the system finds what it expects to find rather than what is objectively there.
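A toy simulation makes the feedback loop concrete. It assumes a hypothetical patrol-allocation rule, not any real deployed system: each day, a patrol goes to whichever of two neighborhoods has more recorded crime, and crime is recorded only where the patrol goes. The two neighborhoods have identical true crime, but one starts with a single extra recorded incident.

```python
def simulate_patrols(initial_records, true_daily_crime, days):
    """Toy feedback loop (hypothetical model, not any real deployed system).

    Each day the patrol goes wherever the *recorded* count is highest,
    and crime is only recorded where the patrol is present.
    """
    records = list(initial_records)
    for _ in range(days):
        # Pick the area the data says is most dangerous (ties go to the lowest index)
        target = max(range(len(records)), key=lambda i: records[i])
        # Only the patrolled area's true crime gets written into the data
        records[target] += true_daily_crime[target]
    return records


# Two neighborhoods with identical true crime; the first is over-recorded by one incident
final = simulate_patrols(initial_records=[11, 10], true_daily_crime=[3, 3], days=30)
print(final)  # [101, 10]
```

After a month, the data "confirms" that the first neighborhood has roughly ten times as much crime, purely because that is where the system kept looking.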

“Part of the problem is that people trained as data scientists who build models and work with data aren’t well connected to civil rights advocates a lot of the time,” said Aaron Rieke of Upturn, a technology consulting firm that works with civil rights and consumer groups. “What I worry most about isn’t companies setting out to racially discriminate. I worry far more about companies that aren’t thinking critically about the way that they might reinforce bias by the source of data they use.”

Understanding what we need to fix

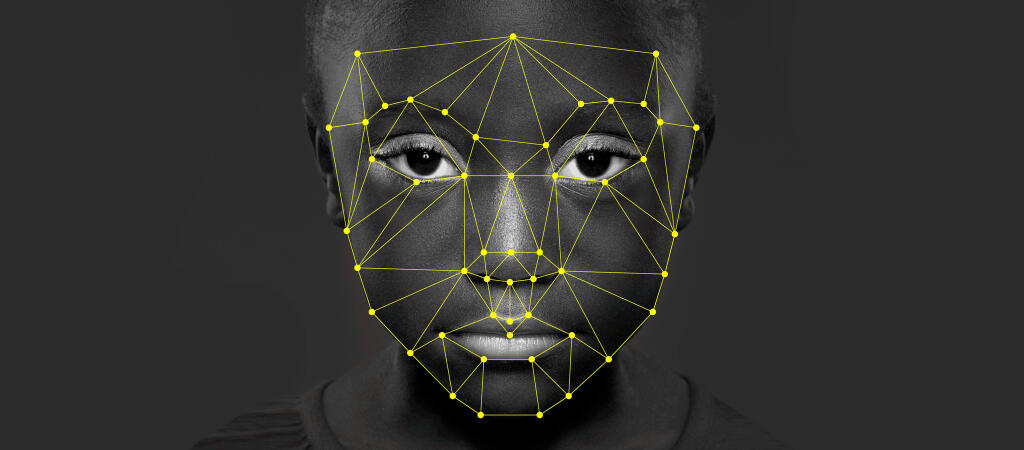

There are similar concerns about algorithmic bias in facial-recognition technology, which already has a far broader impact than most people realize: Over 117 million American adults have had their images entered into a law-enforcement agency’s face-recognition database, often without their consent or knowledge, and the technology remains largely unregulated.

A 2012 paper, which was coauthored by a technologist from the FBI, found that the facial-recognition algorithms it studied were less accurate when identifying the faces of black people, along with women and adults under 30. A key finding of a 2016 study by the Georgetown Center on Privacy and Technology, which examined 15,000 pages of documentation, was that “police face recognition will disproportionately affect African Americans.” (The study also provided models for policy and legislation that could be used to regulate the technology on both federal and state levels.)

Some critics suggest that the solution to these issues is to simply add more diversity to training sets, but it’s more complicated than that, according to Elke Oberg, the marketing manager at Cognitec, a company whose facial-recognition algorithms have been used by law-enforcement agencies in California, Maryland, Michigan and Pennsylvania.

“Unfortunately, it is impossible to make any absolute statements [about facial-recognition technology],” Oberg said. “Any measurements on face-recognition performance depends on the diversity of the images within the database, as well as their quality and quantity.”

Jonathan Frankle, a former staff technologist for the Georgetown University Law Center who has experimented with facial-recognition algorithms, can run through a laundry list of factors that may contribute to the uneven success rates of the many systems currently in use, including the difficulty some systems have in detecting facial landmarks on darker skin, the lack of good training sets available, the complex nature of learning algorithms themselves, and the lack of research on the issue. “If it were just about putting more black people in a training set, it would be a very easy fix. But it’s inherently more complicated than that.”

He thinks further study is crucial to finding solutions, and that the research is years behind the way facial recognition is already being used. “We don’t even fully know what the problems are that we need to fix, which is terrifying and should give any researcher pause,” Frankle said.

The government could step in

New laws and better government regulation could be a powerful tool in reforming how companies and government agencies use AI to make decisions.

In 2016, the European Union adopted the General Data Protection Regulation, which includes numerous restrictions on the automated processing of personal data and requires transparency about “the logic involved” in those systems. Similar federal regulation does not appear to be forthcoming in the U.S. (the FCC and Congress are pushing to either stall or dismantle federal data-privacy protections), though some states, including Illinois and Texas, have passed their own biometric privacy laws to protect the type of personal data often used by algorithmic decision-making tools.

However, existing federal laws do protect against certain types of discrimination — particularly in areas like hiring, housing and credit — though they haven’t been updated to address the way new technologies intersect with old prejudices.

“If we’re using a predictive sentencing algorithm where we can’t interrogate the factors that it is using, or a credit scoring algorithm that can’t tell you why you were denied credit — that’s a place where good regulation is essential, [because] these are civil rights issues,” said Frankle. “The government should be stepping in.”

Transparency and accountability

Another key area where the government could be of use: pushing for more transparency about how these influential predictive tools reach their decisions.

“The only people who have access to that are the people who build them. Even the police don’t have access to those algorithms,” O’Neil said. “We’re handing over the decision of how to police our streets to people who won’t tell us how they do it.”

Frustrated by the lack of transparency in the field, O’Neil started a company to help take a peek inside. Her consultancy conducts algorithmic audits and risk assessments, and it is currently working on a manual for data scientists who want “to do data science right.”

Complicating any push toward greater transparency is the rise of machine learning systems, which are increasingly involved in decisions around hiring, financial lending and policing. Sometimes described as “black boxes,” these predictive models are so complex that even the people who create them can’t always tell how they arrive at their conclusions.

“A lot of these algorithmic systems rely on neural networks which aren’t really that transparent,” said Professor Alvaro Bedoya, the executive director of the Center on Privacy and Technology at Georgetown Law. “You can’t look under the hood, because there’s no such thing as looking under the hood.” In these cases, Bedoya said, it’s important to examine whether the system’s results affect different groups in different ways. Rather than insight into the code, he said, “increasingly, people are calling for algorithmic accountability, to do rigorous testing of these systems and their outputs, to see if the outputs are biased.”
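The kind of output testing Bedoya describes can begin without any access to a model's internals. The sketch below assumes a hypothetical log of (group, approved) decisions, compares approval rates across groups, and divides the lowest rate by the highest. The 0.8 cutoff borrows the "four-fifths" rule of thumb from U.S. employment-selection guidelines; it is one screening heuristic among many, not a definitive test for bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs taken from a model's outputs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group's selection rate divided by the highest group's."""
    return min(rates.values()) / max(rates.values())


# Hypothetical audit log: only the model's decisions, no access to its code
log = ([("group_a", True)] * 50 + [("group_a", False)] * 50
       + [("group_b", True)] * 30 + [("group_b", False)] * 70)

rates = selection_rates(log)
ratio = impact_ratio(rates)
print(rates, round(ratio, 2))  # {'group_a': 0.5, 'group_b': 0.3} 0.6

# Flag for closer review using the four-fifths rule of thumb
flagged = ratio < 0.8
```

A low ratio does not prove discrimination on its own; it marks where a closer look at the inputs and decisions is warranted.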

What does ‘fairness’ mean?

Once we move beyond the technical discussions about how to address algorithmic bias, there’s another tricky debate to be had: How are we teaching algorithms to value accuracy and fairness? And what do we decide “accuracy” and “fairness” mean? If we want an algorithm to be more accurate, what kind of accuracy do we decide is most important? If we want it to be more fair, whom are we most concerned with treating fairly?

For example, is it more unfair for an algorithm like COMPAS to mislabel someone as high-risk and penalize them too harshly, or to mislabel someone as low-risk and potentially make it easier for them to commit another crime? AURA, an algorithmic tool used in Los Angeles to help identify victims of child abuse, faces a similarly thorny dilemma: When the evidence is unclear, how should an automated system weigh the harm of accidentally taking a child away from parents who are not abusive against the harm of unwittingly leaving a child in an abusive situation?
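Part of why this dilemma cannot simply be engineered away is that a risk tool's cutoff trades one kind of mistake for the other. With the hypothetical scores and outcomes below (not drawn from COMPAS or AURA), raising the threshold for a "high-risk" label steadily converts false alarms into misses; no setting eliminates both.

```python
# Hypothetical 1-10 risk scores and whether each person actually reoffended (1) or not (0)
scores = [1, 2, 3, 4, 5, 6, 7, 8, 9]
reoffended = [0, 0, 1, 0, 0, 1, 0, 1, 1]


def errors_at(threshold):
    """Count both error types when scores >= threshold are labeled high-risk."""
    false_pos = sum(1 for s, y in zip(scores, reoffended) if s >= threshold and y == 0)
    false_neg = sum(1 for s, y in zip(scores, reoffended) if s < threshold and y == 1)
    return false_pos, false_neg


for t in (3, 5, 7, 9):
    fp, fn = errors_at(t)
    print(f"cutoff {t}: {fp} wrongly labeled high-risk, {fn} wrongly labeled low-risk")
```

Choosing where to set that cutoff is exactly where the moral judgment gets encoded into the system.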

“In some cases, the most accurate prediction may not be the most socially desirable one, even if the data is unbiased, which is a huge assumption — and it’s often not,” Rieke said.

Advocates say the first step is to start demanding that the institutions using these tools make deliberate choices about the moral decisions embedded in their systems, rather than shifting responsibility to the faux neutrality of data and technology.

“It can’t be a technological solution alone,” Ajunwa said. “It all goes back to having an element of human discretion and not thinking that all tough questions can be answered by technology.”

Others suggest that human decision-making is so prone to cognitive bias that data-driven tools might be the only way to counteract it, assuming we can learn to build them better: by being conscientious, by being transparent and by candidly facing the biases of the past and present in hopes of not coding them into our future.

“Algorithms only repeat our past, so they don’t have the moral innovation to try and improve our lives or our society,” O’Neil said. “But as long as our society is itself imperfect, we are going to have to adjust something to remove the discrimination. I am not a proponent of going back to purely human decision-making because humans aren’t great. … I do think algorithms have the potential for doing better than us.” She paused for a moment. “I might change my mind if you ask me in five years, though.”

Source: http://53eig.ht/2uMS4Kk