Steve Thomas and I are talking about brain implants. Bonnie Tyler’s Holding Out For a Hero is playing in the background and for a moment I almost forget that a disease has robbed Steve of his speech. The conversation breaks briefly; now I see his wheelchair, his ventilator, his hospital bed.

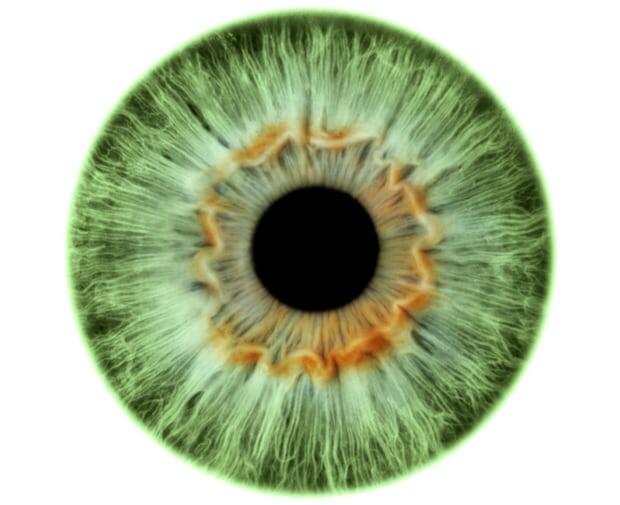

Steve, a software engineer, was diagnosed with ALS (amyotrophic lateral sclerosis, a type of motor neurone disease) aged 50. He knew it was progressive and incurable; that he would soon become unable to move and, in his case, speak. He is using eye-gaze technology to tell me this (and later to turn off the sound of Bonnie Tyler); cameras pick up light reflection from his eye as he scans a screen. Movements of his pupils are translated into movements of a cursor through infrared technology and the cursor chooses letters or symbols. A speech-generating device transforms these written words into spoken ones – and, in turn, sentences and stories form.
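For readers curious how a cursor "chooses" a letter, the selection step can be sketched in a few lines of Python. This is only an illustration, not the software Steve uses. It assumes the eye tracker already supplies gaze positions as screen coordinates, and that a key counts as pressed once the gaze has rested on it for a set number of consecutive samples (often called dwell selection). The names `Key`, `build_keyboard` and `dwell_select`, the grid layout and the 30-sample threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Key:
    """One on-screen key; position and size are in pixels (hypothetical layout)."""
    label: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, gx: float, gy: float) -> bool:
        return self.x <= gx < self.x + self.w and self.y <= gy < self.y + self.h


def build_keyboard(labels, cols=6, key_size=100.0):
    """Lay the labels out on a simple grid, row by row."""
    keys = []
    for i, label in enumerate(labels):
        row, col = divmod(i, cols)
        keys.append(Key(label, col * key_size, row * key_size, key_size, key_size))
    return keys


def dwell_select(gaze_samples, keys, dwell_samples=30):
    """Return the labels chosen by resting the gaze on a key.

    gaze_samples is an iterable of (x, y) gaze positions. A key is chosen
    once, at the moment the gaze has stayed on it for `dwell_samples`
    consecutive samples (an assumed threshold, not a real device setting).
    """
    typed = []
    current = None  # key the gaze is resting on right now
    count = 0       # consecutive samples spent on that key
    for gx, gy in gaze_samples:
        hit = next((k for k in keys if k.contains(gx, gy)), None)
        if hit is not current:
            current, count = hit, 0  # gaze moved: restart the dwell timer
        if hit is None:
            continue                 # looking away from the keyboard
        count += 1
        if count == dwell_samples:
            typed.append(hit.label)
    return typed


keys = build_keyboard("ABCDEFGHIJKL")
samples = [(150.0, 150.0)] * 30 + [(250.0, 150.0)] * 30
print("".join(dwell_select(samples, keys)))  # prints "HI"
```

The dwell threshold is the trade-off in this sketch: a shorter dwell types faster but makes accidental selections more likely.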

Eye-gaze devices allow some people with limited speech or hand movements to communicate, use environmental controls, compose music, and paint. That includes patients with ALS (up to 80% of whom have communication difficulties), cerebral palsy, strokes, multiple sclerosis and spinal cord injuries. It’s a far cry from Elle editor-in-chief Jean-Dominique Bauby, locked in by a stroke in 1995, painstakingly blinking through letters on an alphabet board. His memoir, written at one word every two minutes, later became a film, The Diving Bell and the Butterfly. Although some still use low-tech options (not everyone can meet the physical or cognitive requirements for eye-gaze systems; occasionally, locked-in patients can blink but cannot move their eyes), speech-to-text and text-to-speech functionality on smartphones and tablets has revolutionised communication.

Eye-gaze technology has also evolved rapidly with open source and lower-cost options, solid NHS funding, and improved accessibility – some users are as young as 13 months. Recent prototypes have built similar technology into smartphones and glasses. But in practice, even some newer systems allow for only eight words per minute (wpm). Technical hitches during my visit mean that Steve reaches 3 wpm at times; by comparison, the average speech rate in free-flowing conversation is 190 wpm. “Eye gaze allows me to do anything from Skype my wife to playing games,” he says, “but the downside is that sometimes I can’t really join in with conversations.”

Yet Steve still sounds like Steve. He preserved his speech before it disappeared through voice banking, recording around 1,500 phrases. Now his communication device generates an infinite number of sentences from those sounds, his identity in some way preserved. Prices start at around £100 for voice-banking services; some charities offer equipment loans and financial support.

In the Netherlands, Peter Desain, chair of artificial intelligence at the Donders Institute for Brain, Cognition and Behaviour, is researching brain-computer interfaces (BCI), a technique in which brain signals are analysed and used to control external devices. BCI recently enabled three paralysed patients elsewhere to type with their thoughts through brain implants. That system, still in early development, is slow (2-8 wpm), expensive, and can take months to learn.

But Desain’s NoiseTag method, developed at Radboud University Nijmegen, does not require surgery. Instead, users wear a headset fitted with electrodes that record brain activity. They look at a screen with a matrix of flashing characters, each tagged with a specific code. Focusing on a given character causes its signature to appear in brainwaves recorded by the headset; the corresponding character is typed, and then a device “speaks” a completed sentence. The system exploits machine learning and predicts a user’s response for each pattern; this means that limited, if any, calibration is needed – one letter can be typed every one or two seconds with 95% accuracy. Twenty patients with ALS are trialling the technology, with a spin-off aiming to scale up numbers over the next year and extend the method to the internet of things (IoT).
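The idea of tagging each character with its own code, then looking for that code in the recorded signal, can be illustrated with a toy simulation. The sketch below is not the NoiseTag algorithm and involves no real brain data. It assumes each character flashes according to a pseudo-random sequence of +1/-1 values, that the "recording" is simply the attended character's sequence plus random noise, and that the decoder picks the character whose code correlates best with the recording. The function names, code length and noise level are hypothetical.

```python
import random


def make_codes(chars, length=126, seed=0):
    """Give every character its own pseudo-random +1/-1 flash sequence."""
    rng = random.Random(seed)
    return {c: [rng.choice((-1.0, 1.0)) for _ in range(length)] for c in chars}


def simulate_recording(code, noise_sd=2.0, rng=None):
    """Toy 'brain signal': the attended code buried in Gaussian noise."""
    rng = rng or random.Random()
    return [bit + rng.gauss(0.0, noise_sd) for bit in code]


def decode(recording, codes):
    """Return the character whose code correlates best with the recording."""
    def score(code):
        return sum(r * b for r, b in zip(recording, code))
    return max(codes, key=lambda c: score(codes[c]))


codes = make_codes("ABCDEFGHIJKLMNOPQRSTUVWXYZ")
recording = simulate_recording(codes["H"], noise_sd=2.0, rng=random.Random(1))
print(decode(recording, codes))
```

In this toy version, longer codes make the decision more reliable but take longer to flash, the same speed-versus-accuracy tension behind any figure such as one letter every one or two seconds.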

Facebook recently announced that it is stepping into the BCI ring, ready to decode neural activity within two years using a yet-to-be-developed non-invasive technique at the mysterious Building 8 in Menlo Park, California. Mark Zuckerberg believes we will soon be typing using only our thoughts at five times the speed currently managed on smartphones. In addition, Elon Musk has just founded the $100m company Neuralink and says we will all be benefiting from its BCI technology within a decade. Others express healthy scepticism; bandwidth and biocompatibility remain significant challenges, let alone surgical risks and our still-evolving understanding of neuroscientific principles.

In the US, Darpa (the Defense Advanced Research Projects Agency) has also invested $65m in neural implants to restore speech, hearing, vision and memory in those with brain trauma and neurodegenerative disease. One group is working to wirelessly record signals from up to 1m neurons at once (currently, BCI reads around 100 neurons) by implanting tens of thousands of microdevices called neurograins across the brain. This “cortical intranet” will read out and write in information – brain enhancement might follow. Neuroprostheses have already shown promise in improving memory in rats and decision-making in monkeys; humans are another matter, of course. Perhaps that is why Kernel, a $100m start-up that aims to build “the world’s first neuroprosthesis to enhance human intelligence”, has quickly shifted focus. Founder Bryan Johnson says invasive BCI “is really interesting, but not an entry point” into a commercially viable business.

Meanwhile, Facebook has sought to dampen concerns around privacy and brain hacking: “This isn’t about decoding random thoughts. This is about decoding the words you’ve already decided to share by sending them to the speech centre of your brain. Think of it like this: you take many photos and choose to share only some of them. Similarly, you have many thoughts and choose to share only some of them.” Of course, photos not meant for sharing don’t always stay unshared …

“I already have a [prototype] BCI,” Steve tells me. “My death wish is when I don’t even respond with BCI.” His words bring to mind something written by Bauby, mourning the loss of true conversation: “Sweet Florence refuses to speak to me unless I first breathe noisily into the receiver that Sandrine holds glued to my ear. ‘Are you there, Jean-Do?’ she asks anxiously over the air. And I have to admit that at times I do not know any more.”

For many like Steve, communication technology is not about sophisticated brain enhancement; it is simply about existence.

Source: http://bit.ly/2zrrPYY